Let's learn about cross-entropy

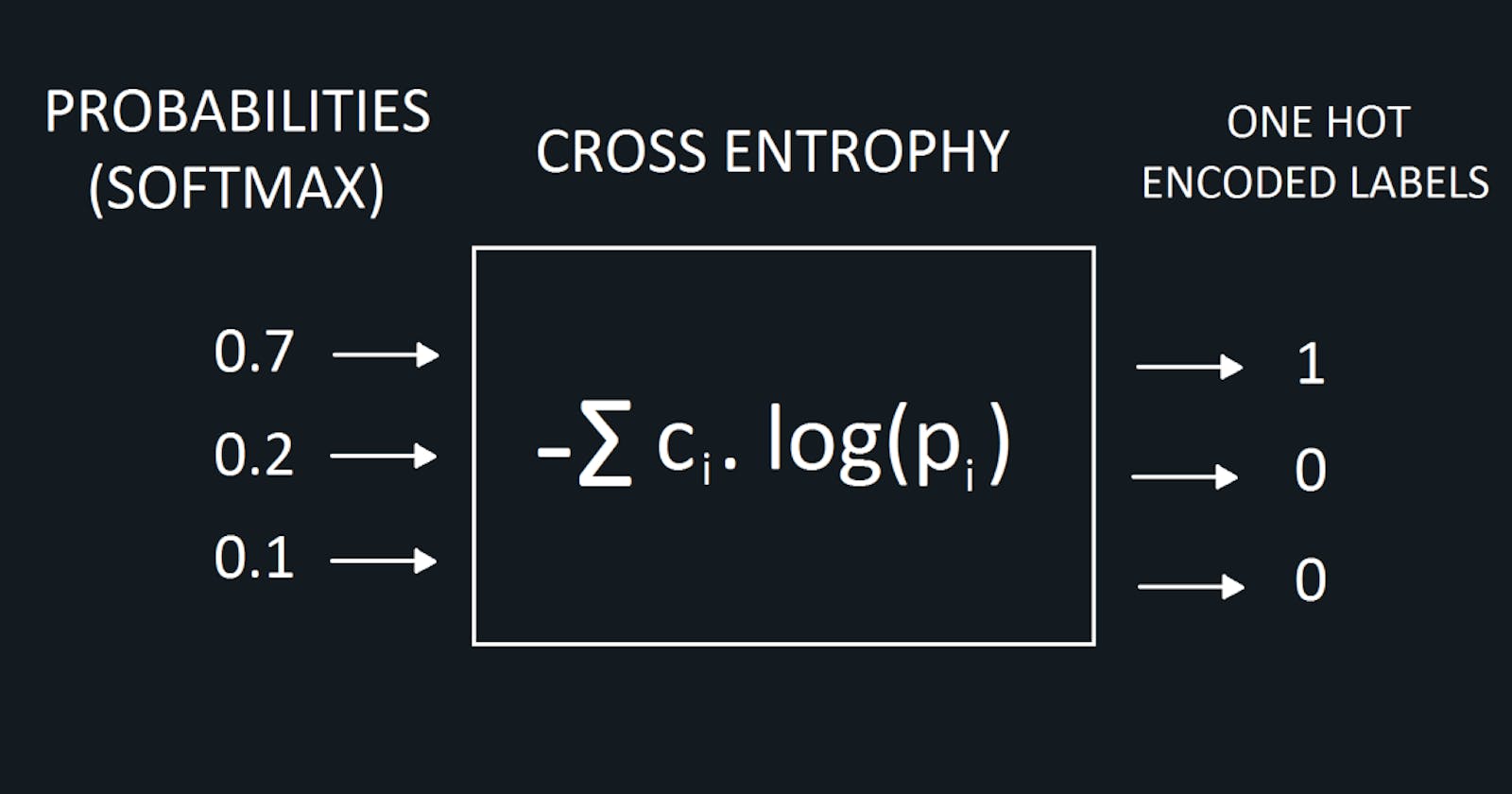

Cross-entropy can be used as a loss function for classification models like logistic regression or artificial neural networks.

It measures how far a predicted probability distribution is from the true one.

The "true" distribution (the one that your machine learning algorithm is trying to match) is expressed as a one-hot encoded distribution.

Here, Pr denotes the probability assigned to a label.
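For reference, the general definition can be written as below. This is the standard textbook form rather than something taken from this post, and the symbols `p_i` (true probability of class `i`) and `q_i` (predicted probability of class `i`) are notation introduced here for illustration.

```latex
L = -\sum_{i} p_i \ln q_i
```

With a one-hot true distribution, every term except the one for the true class is multiplied by zero, so the loss reduces to the negative log of the probability the model gives to the true class.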

Consider a specific training instance whose true label is B.

The one-hot encoding will be:

| Pr(Class A) | Pr(Class B) | Pr(Class C) |
| --- | --- | --- |
| 0.0 | 1.0 | 0.0 |

Suppose your model predicts:

| Pr(Class A) | Pr(Class B) | Pr(Class C) |
| --- | --- | --- |
| 0.228 | 0.619 | 0.153 |

The loss will be:

L = -(0.0 × ln(0.228) + 1.0 × ln(0.619) + 0.0 × ln(0.153))

L = -ln(0.619) ≈ 0.480

That's how "wrong" or "far away" your prediction is from the true distribution.
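The arithmetic above can be checked with a few lines of Python. This is a minimal sketch using only the standard library; the function name `cross_entropy` and the variable names are chosen here for illustration and are not from any particular library.

```python
import math


def cross_entropy(true_dist, predicted_dist):
    """Cross-entropy (natural log) between two distributions given as sequences."""
    # Terms whose true probability is 0 contribute nothing, so skip them.
    # Skipping also avoids evaluating log(0) when such a class is predicted
    # with probability 0.
    return -sum(
        p * math.log(q)
        for p, q in zip(true_dist, predicted_dist)
        if p > 0
    )


true_dist = [0.0, 1.0, 0.0]              # one-hot encoding for true label B
predicted_dist = [0.228, 0.619, 0.153]   # model prediction from the example

loss = cross_entropy(true_dist, predicted_dist)
print(f"{loss:.3f}")  # prints 0.480
```

A perfect prediction such as `[0.0, 1.0, 0.0]` gives a loss of 0, and the loss grows as the probability assigned to the true class shrinks. Note that this sketch raises an error if the model assigns probability 0 to the true class, since the logarithm of 0 is undefined.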

In short, cross-entropy measures the loss between two probability distributions, and it is one of many possible loss functions.

Hopefully you now have an understanding of what cross-entropy is.

Don't forget to like and share it.